The Threat of Deepfakes: How AI-Generated Content Is Making Scammers More Successful

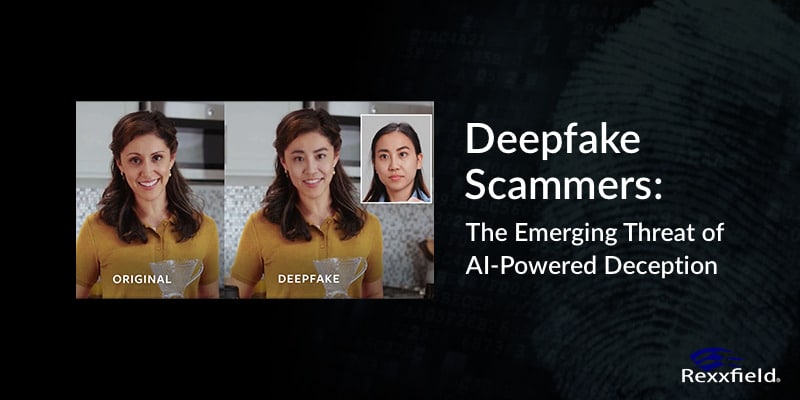

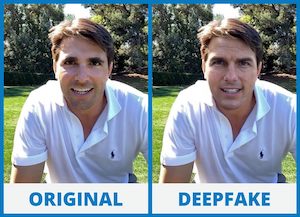

In recent years, deepfake technology has become a major concern for cybersecurity experts and ordinary people alike. Deepfakes are images, videos, and audio recordings generated with artificial intelligence (AI) and machine learning to imitate a real person's face or voice. Cybercriminals are increasingly using them to scam and defraud people.

We help victims by tracing the individuals who publish, distribute, or use deepfake material to extract money from them.

But more about that later. Let’s first explore how deepfakes make AI-powered deception more convincing and scammers more successful.

What Is Deepfake Technology?

How Deepfakes Are Amplifying Scammers’ Deception

Deepfake scams rely on realistic-looking images, videos, or audio of real people. Scammers use this material to convince victims that they are dealing with a genuine person, such as a celebrity, a family member, or a colleague. Cybercriminals can also impersonate high-ranking figures, such as CEOs or government officials, to gain access to sensitive information or to defraud people.

One of the most common deepfake scams involves fraudsters creating fake videos or audio recordings of people, often famous personalities or celebrities, endorsing a particular product or service. The fakes are then shared on social media or sent by email to unsuspecting people, who are persuaded to buy or invest in the product or service. This type of scam is particularly effective because people tend to trust celebrities and public figures and are therefore more likely to believe that the endorsement is genuine.

Another type of deepfake scam involves cybercriminals impersonating family members or friends and asking for money or sensitive information. This often happens through social media or messaging apps, where the scammer creates a fake profile or copies an existing one. The scammer then uses that profile to message the victim, claiming to be in an emergency and in urgent need of money.

In one case, Ruth Card received a phone call from a man who sounded just like her grandson. He said he was in jail and urgently needed money for bail, so Ruth rushed to gather the money he asked for. Unfortunately, the caller was not her grandson but a scammer who had mimicked his voice to trick her into handing over money.

How Deepfake Scams Use FaceTime Calls to Trick Victims Into Giving Money

Fake FaceTime calls are one way scammers put deepfake technology to work. The scammer poses as someone the victim knows, such as a friend or family member, and uses deepfake technology to alter their own voice so that it sounds like that person. They then ask the victim for money, claiming to be in urgent need of financial help. In some cases, scammers also use deepfakes to generate a video feed that appears to show the person they are impersonating. This makes the scam even more convincing, because the victim believes they are talking to the real person.

These scams can be highly effective because they exploit the victim’s trust in the person being impersonated. Victims are more likely to believe a call is genuine when it appears to come from someone they know and the caller’s voice and appearance seem authentic.

To avoid falling victim to deepfake FaceTime scams, verify the identity of the person you are speaking with before providing any sensitive information or financial help. For example, ask a question only the real person could answer, or end the call and contact them through a different channel, such as a phone number you already have for them. Be cautious with unsolicited calls or messages, and never share personal or financial information unless you are certain the communication is genuine.

Combating Deepfake Scams: How Cyber Investigators Are Tackling AI-Powered Deception

One of the main challenges for cyber investigators is that deepfakes can be difficult to detect. These synthetic media are created using artificial intelligence and machine learning algorithms that manipulate images, videos, and audio recordings to make them appear real. The manipulation can be very subtle, making it challenging to distinguish between a deepfake and a genuine recording.

To tackle this issue, cyber investigators use a range of techniques, including AI and machine learning algorithms, to detect deepfakes. These techniques analyze the media for patterns that indicate manipulation. This can include examining the visual or audio characteristics of a recording, such as inconsistent lighting, blurring around the edges of a face, or lip movements that do not match the speech, as well as using specialized software tools to identify anomalies in the data.

Another way cyber investigators are combating deepfake scams is through public awareness campaigns. Picdo.org is one example: through it, we educate people about scams and how to protect themselves. Many people are still unaware of the dangers of deepfakes and how they can be used to deceive them. By educating the public about the risks of deepfakes and how to spot them, cyber investigators can help prevent people from falling victim to these scams.

Cyber investigators are also working closely with social media platforms to identify and remove deepfakes. Platforms use a combination of automated systems and human moderators to find and take down content that violates their policies, and they are investing in technology to detect deepfakes automatically.

Cyber investigators play a vital role in combating deepfake scams, both by using advanced techniques to detect deepfakes and by raising public awareness of the risks.

Our cyber investigators can also trace deepfakes to identify the scammers involved.


How to Protect Yourself from Scams

To protect against deepfake scams, individuals should take the following precautions:

- Be cautious when receiving messages or requests for information, especially if they are from people you do not know well.

- Verify the identity of the person sending the message before responding or providing any sensitive information.

- Never reuse a password, and use long, random combinations of characters.

- Set up two-factor authentication to protect your online accounts.

- Use reputable security software and keep it up to date.

- Be aware of the risks of deepfakes and educate yourself on how to identify them.
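For readers comfortable with a little code, the password advice above can be put into practice with a short script. This is a minimal sketch, not a Picdo.org tool. It assumes Python 3.6 or later and uses the standard-library `secrets` module, which draws from a cryptographically secure random source. The function name `generate_password`, the 20-character default and the example account names are our own illustrative choices.

```python
import secrets
import string

# Character pool: upper- and lower-case letters, digits, and punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password of `length` characters.

    `generate_password` is a hypothetical helper for illustration.
    `secrets.choice` uses a cryptographically secure random source,
    unlike the `random` module, which should not be used for passwords.
    """
    if length <= 0:
        raise ValueError("length must be a positive number")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    # A separate password for each account, so one leak cannot unlock the others.
    for account in ("email", "bank", "social media"):
        print(f"{account}: {generate_password()}")
```

Each run prints a different password for each account. This covers both parts of the advice: no reuse and no guessable patterns.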